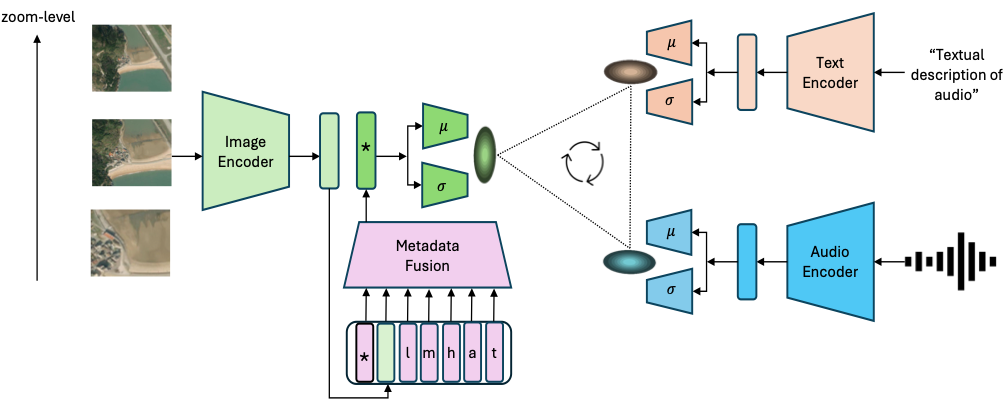

Method

Our proposed framework, Probabilistic Soundscape Mapping (PSM), combines image, audio, and text encoders to learn a probabilistic joint representation space. Metadata, including geolocation (l), month (m), hour (h), audio-source (a), and caption-source (t), is encoded separately and fused with the image embeddings by a transformer-based metadata fusion module. For each encoder, 𝜇 and 𝜎 heads yield probabilistic embeddings, which are used to compute a probabilistic contrastive loss.
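To make the role of the 𝜇 and 𝜎 heads concrete, here is a minimal sketch of how two probabilistic embeddings could be compared. It assumes each embedding is a diagonal Gaussian and uses the expected squared distance between samples, which has a closed form. The exact loss used by PSM is not stated on this page, so the function names, the `scale` and `shift` parameters, and the binary cross-entropy form below are illustrative assumptions, not the authors' implementation.

```python
import math


def expected_squared_distance(mu_a, sigma_a, mu_b, sigma_b):
    """Expected squared distance between samples of two independent diagonal Gaussians.

    E||Za - Zb||^2 = ||mu_a - mu_b||^2 + sum(sigma_a^2 + sigma_b^2)
    """
    mean_term = sum((x - y) ** 2 for x, y in zip(mu_a, mu_b))
    var_term = sum(s ** 2 + t ** 2 for s, t in zip(sigma_a, sigma_b))
    return mean_term + var_term


def match_probability(distance, scale=1.0, shift=0.0):
    """Map a distance to a match probability with a sigmoid.

    `scale` and `shift` are hypothetical scalars (learnable in a real model).
    """
    return 1.0 / (1.0 + math.exp(scale * distance - shift))


def pair_loss(distance, is_match, scale=1.0, shift=0.0):
    """Binary cross-entropy on the match probability (an assumed loss form)."""
    p = match_probability(distance, scale, shift)
    eps = 1e-12  # guards against log(0)
    return -math.log(p + eps) if is_match else -math.log(1.0 - p + eps)


# Toy 2-D embeddings (made-up values): one image, one matching and one
# non-matching audio clip.
image_mu, image_sigma = [0.0, 1.0], [0.1, 0.1]
near_mu, near_sigma = [0.0, 1.0], [0.1, 0.1]
far_mu, far_sigma = [1.0, 0.0], [0.1, 0.1]

d_near = expected_squared_distance(image_mu, image_sigma, near_mu, near_sigma)
d_far = expected_squared_distance(image_mu, image_sigma, far_mu, far_sigma)
```

In this sketch, larger 𝜎 values increase the distance even when the means coincide, so an uncertain embedding is treated as a weaker match than a confident one.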

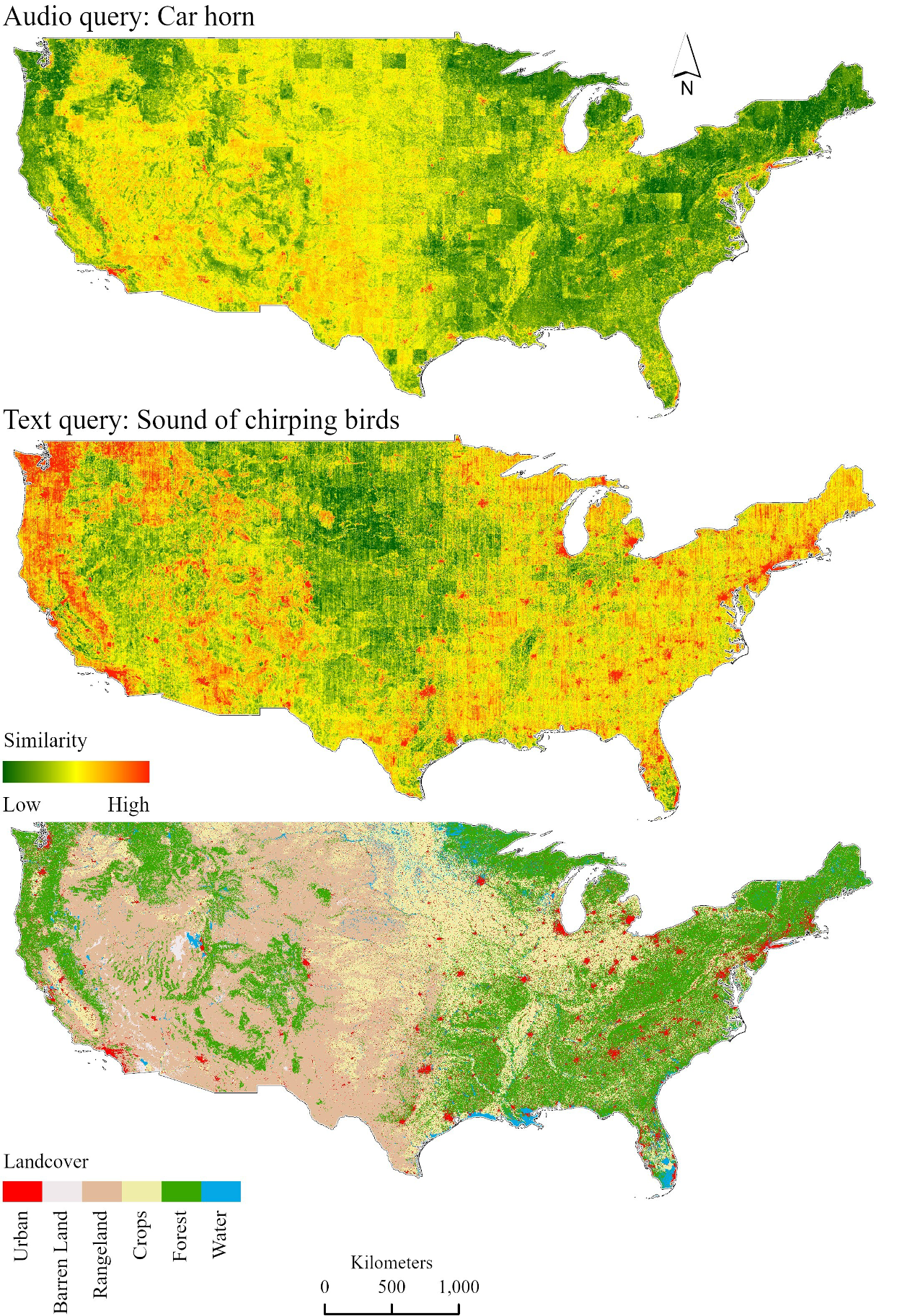

Soundscape Maps

Two soundscape maps of the continental United States, generated from Bing image embeddings obtained from PSM, accompanied by a land cover map for reference.

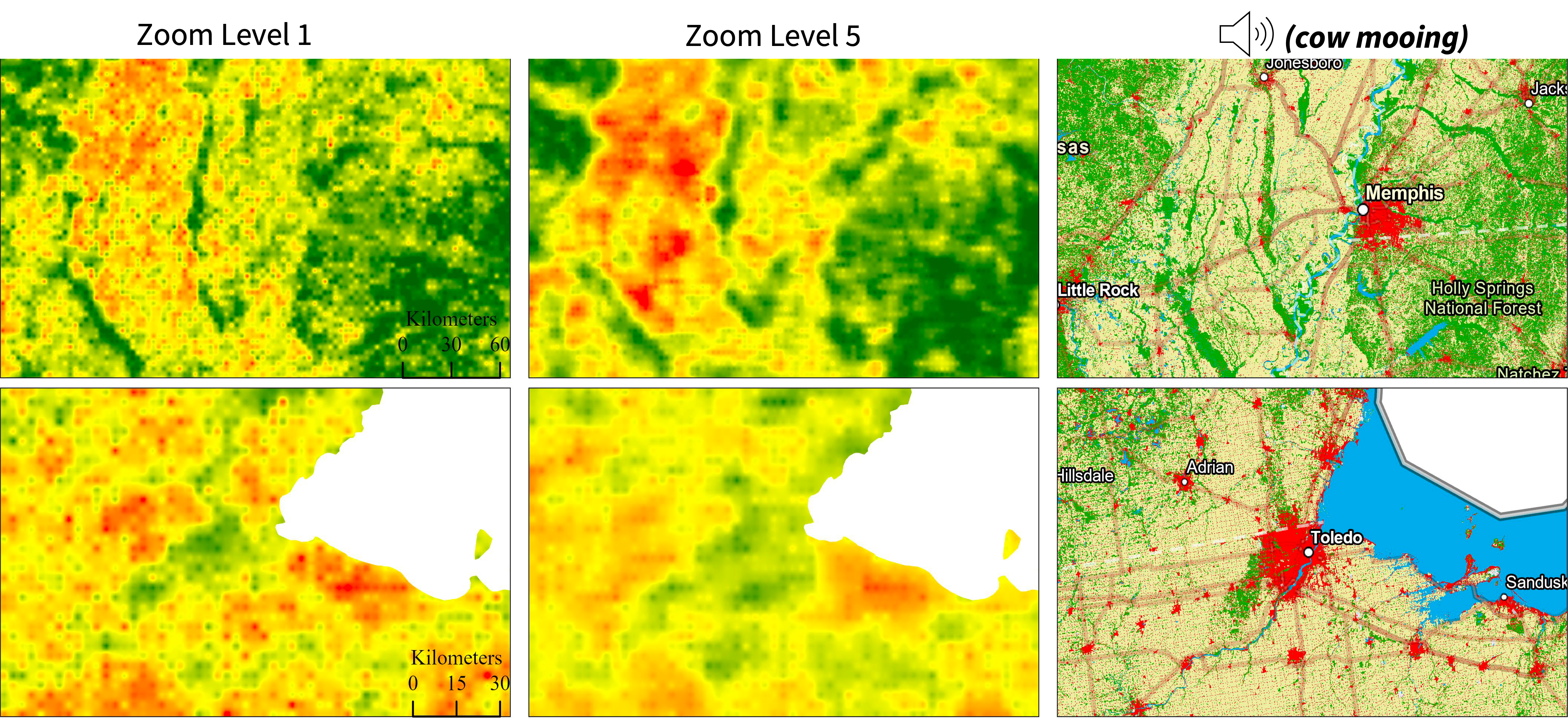

Soundscape maps over smaller geographic areas using PSM embeddings from Sentinel-2 satellite imagery at two zoom levels.

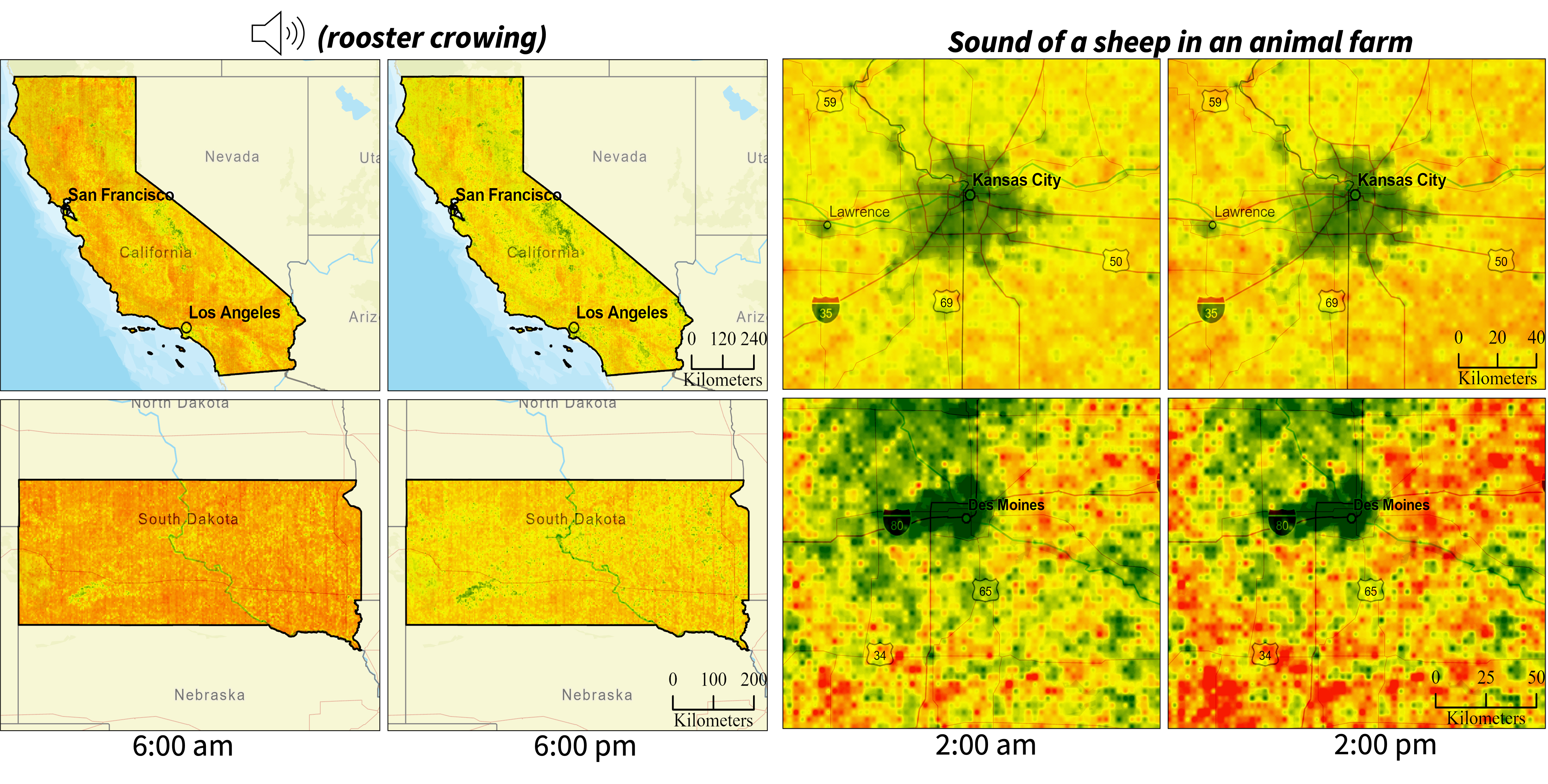

Temporally dynamic soundscape maps created by querying our model with audio and text queries.

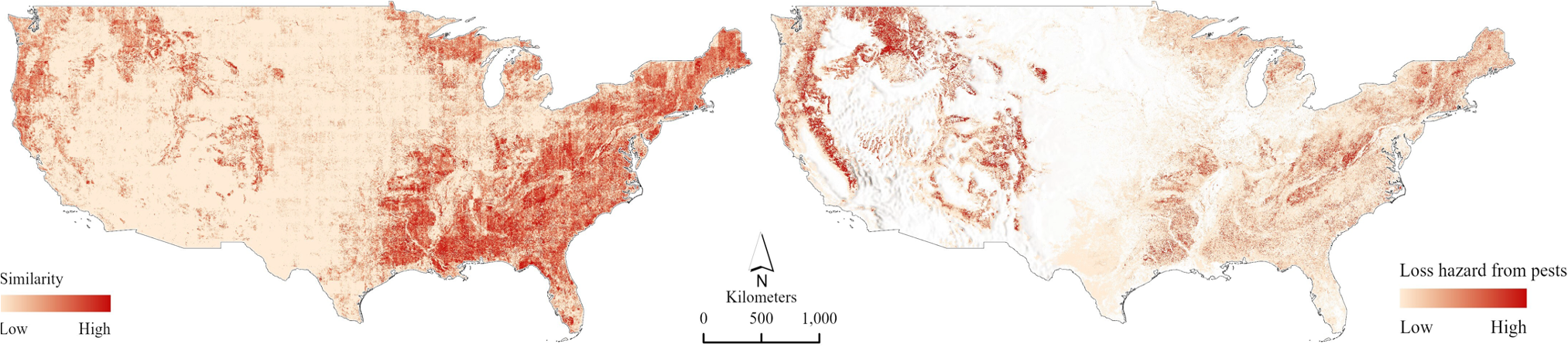

A soundscape map of the USA for the text query "sound of insects", compared with a reference map indicating the risk of pest-related hazards.
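The maps above come from comparing a query embedding (audio or text) with the image embedding at each location. A minimal sketch of that scoring step follows. It assumes plain cosine similarity between deterministic vectors, which may differ from the scoring PSM actually uses, and the grid, the embeddings, and the function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def soundscape_map(grid_embeddings, query_embedding):
    """Score every grid cell against the query.

    grid_embeddings is a 2-D list (rows x columns) of image embeddings,
    one per map location; the result has the same row/column layout.
    """
    return [
        [cosine_similarity(cell, query_embedding) for cell in row]
        for row in grid_embeddings
    ]


# Toy 2 x 2 grid of 2-D image embeddings (made-up values).
grid = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [-1.0, 0.0]],
]
query = [1.0, 0.0]  # stands in for an encoded text or audio query

scores = soundscape_map(grid, query)
```

Rendering `scores` as a heat map over the grid gives a soundscape map for that query. Re-encoding the locations with different month or hour metadata would give one map per time step.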

Satellite Image to Sound Retrieval

BibTeX

@inproceedings{khanal2024psm,

title = {PSM: Learning Probabilistic Embeddings for Multi-scale Zero-Shot Soundscape Mapping},

author = {Khanal, Subash and Xing, Eric and Sastry, Srikumar and Dhakal, Aayush and Xiong, Zhexiao and Ahmad, Adeel and Jacobs, Nathan},

year = {2024},

month = nov,

booktitle = {Association for Computing Machinery Multimedia (ACM Multimedia)},

}

Follow more work from our lab: The Multimodal Vision Research Laboratory (MVRL)